The Future of Everything covers the innovation and technology transforming the way we live, work and play, with monthly issues on health, money, cities and more. This month is Artificial Intelligence, online starting July 2 and in the paper on July 9.

Facial-recognition systems, long touted as a quick and dependable way to identify everyone from employees to hotel guests, are in the crosshairs of fraudsters. For years, researchers have warned about the technology’s vulnerabilities, but recent schemes have confirmed their fears—and underscored the difficult but necessary task of improving the systems.

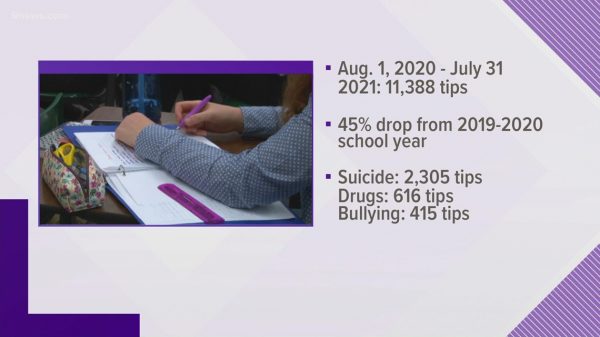

In the past year, thousands of people in the U.S. have tried to trick facial identification verification to fraudulently claim unemployment benefits from state workforce agencies, according to identity verification firm ID.me Inc. The company, which uses facial-recognition software to help verify individuals on behalf of 26 U.S. states, says that between June 2020 and January 2021 it found more than 80,000 attempts to fool the selfie step in government ID matchups among the agencies it worked with. That included people wearing special masks, using deepfakes—lifelike images generated by AI—or holding up images or videos of other people, says ID.me Chief Executive Blake Hall.

Thousands of people have used masks and dummies like these to try and trick facial identification verification, according to ID.me.

Photo: ID.me

Facial recognition for one-to-one identification has become one of the most widely used applications of artificial intelligence, allowing people to make payments via their phones, walk through passport checking systems or verify themselves as workers. Drivers for Uber Technologies Inc., for instance, must regularly prove they are licensed account holders by taking selfies on their phones and uploading them to the company, which uses Microsoft Corp.’s facial-recognition system to authenticate them. Uber, which is rolling out the selfie-verification system globally, did so because it had grappled with drivers hacking its system to share their accounts. Uber declined to comment.

Amazon.com Inc. and smaller vendors like Idemia Group S.A.S., Thales Group and AnyVision Interactive Technologies Ltd. sell facial-recognition systems for identification. The technology works by mapping a face to create a so-called face print. Identifying single individuals is typically more accurate than spotting faces in a crowd.

Still, this form of biometric identification has its limits, researchers say.

Why criminals are fooling facial recognition

Analysts at credit-scoring company Experian PLC said in a March security report that they expect to see fraudsters increasingly create “Frankenstein faces,” using AI to combine facial characteristics from different people to form a new identity to fool facial ID systems.

Facial-recognition identification works by mapping a face to create a so-called face print.

Photo: Jamie Chung for The Wall Street Journal

The analysts said the strategy is part of a fast-growing type of financial crime known as synthetic identity fraud, where fraudsters use an amalgamation of real and fake information to create a new identity.

Until recently, it has been activists protesting surveillance who have targeted facial-recognition systems. Privacy campaigners in the U.K., for instance, have painted their faces in asymmetric makeup specially designed to scramble the facial-recognition software powering cameras while walking through urban areas.

Criminals have more reasons to do the same, from spoofing people’s faces to access the digital wallets on their phones, to getting through high-security entrances at hotels, business centers or hospitals, according to Alex Polyakov, the CEO of Adversa.ai, a firm that researches secure AI. Any access control system that has replaced human security guards with facial-recognition cameras is potentially at risk, he says, adding that he has confused facial-recognition software into thinking he was someone else by wearing specially designed glasses or Band-Aids.

A growing threat

The idea of fooling these automated systems dates back several years. In 2017, a male customer of insurance company Lemonade tried to fool its AI for assessing claims by dressing in a blond wig and lipstick, and uploading a video saying his $5,000 camera had been stolen. Lemonade’s AI systems, which analyze such videos for signs of fraud, flagged the video as suspicious and found the man was trying to create a fake identity. He had previously made a successful claim under his normal guise, the company said in a blog post. Lemonade, which says on its website that it uses facial recognition to flag claims submitted by the same person under different identities, declined to comment.


Earlier this year, prosecutors in China accused two people of stealing more than $77 million by setting up a shell company purporting to sell leather bags and sending fraudulent tax invoices to their supposed clients. The pair was able to send out official-looking invoices by fooling the local government tax office’s facial-recognition system, which was set up to track payments and crack down on tax evasion, according to prosecutors cited in a March report in the Xinhua Daily Telegraph. Prosecutors said in a posting on a Chinese chat service that the attackers had hacked the local government’s facial-recognition service with videos they had produced. The Shanghai prosecutors couldn’t be reached for comment.

The pair bought high-definition photographs of faces from an online black market, then used an app to create videos from the photos to make it look like the faces were nodding, blinking and opening their mouths, the report says.

The duo, who had the surnames Wu and Zhou, used a special mobile phone that would turn off its front-facing camera and upload the manipulated videos when it was meant to be taking a video selfie for Shanghai’s tax system, which uses facial recognition to authenticate tax returns, the report says. Wu and Zhou had been operating since 2018, according to prosecutors.

Spoofing a facial-recognition system doesn’t always require sophisticated software, according to John Spencer, chief strategy officer of biometric identity firm Veridium LLC. One of the most common ways of fooling a face-ID system, or carrying out a so-called presentation attack, is to print a photo of someone’s face and cut out the eyes, using the photo as a mask, he says. Many facial-recognition systems, such as the ones used by financial trading platforms, check to see if a video shows a live person by examining their blinking or moving eyes.
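The blink check described above, and why a cut-out photo can defeat it, can be illustrated with a rough sketch. It assumes a hypothetical upstream step that scores how open the eyes are in each video frame, from 0.0 (closed) to 1.0 (wide open). The function names and thresholds are illustrative, not taken from any vendor's product.

```python
def count_blinks(eye_openness, closed_below=0.2, open_above=0.5):
    """Count blinks in a sequence of per-frame eye-openness scores.

    A blink is counted each time the eyes go from open to closed
    and then back to open. Thresholds are illustrative assumptions.
    """
    blinks = 0
    eyes_closed = False
    for value in eye_openness:
        if not eyes_closed and value < closed_below:
            eyes_closed = True          # eyes just closed
        elif eyes_closed and value > open_above:
            eyes_closed = False         # eyes reopened: one full blink
            blinks += 1
    return blinks


def passes_blink_check(eye_openness, min_blinks=1):
    """Naive liveness test: require at least min_blinks blinks."""
    return count_blinks(eye_openness) >= min_blinks


# A flat printed photo never blinks, so it fails this check.
static_photo = [0.8, 0.8, 0.8, 0.8, 0.8, 0.8]

# A live face, or a photo mask with the eyes cut out and a real
# person blinking behind it, produces the same kind of signal.
live_or_cutout_mask = [0.8, 0.7, 0.1, 0.05, 0.6, 0.8, 0.1, 0.7]

print(passes_blink_check(static_photo))
print(passes_blink_check(live_or_cutout_mask))
```

A check like this looks only at eye movement, which is why a paper mask with eye holes can slip past it: the blinking is real even though the face is not.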

Most of the time, Mr. Spencer says, his team could use this tactic and others to test the limits of facial-recognition systems, sometimes folding the paper “face” to give it more perceived depth. “Within an hour I break almost all of [these systems],” he says.

Apple Inc.’s Face ID, which was launched in 2017 with the iPhone X, is among the most difficult to fool, according to scientists. Its camera projects more than 30,000 invisible dots to create a depth map of a person’s face, which it then analyzes, while also capturing an infrared image of the face. Using the iPhone’s chip, it then processes that image into a mathematical representation, which it compares with its own database of a user’s facial data, according to Apple’s website. An Apple spokeswoman says that the company’s website states that for privacy reasons, Face ID’s data never leaves an iPhone.
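The general idea of comparing a mathematical representation of a face against stored data can be sketched in a few lines. This is not Apple's method, which the company does not publish in detail. It is a generic illustration that assumes each face has already been reduced to a short list of numbers (a face print), and it uses cosine similarity with an arbitrary threshold as a stand-in for whatever comparison a real system performs.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_match(probe, enrolled, threshold=0.9):
    """Accept the probe face print if it is close enough to the enrolled one.

    The 0.9 threshold is an illustrative assumption, not a vendor setting.
    """
    return cosine_similarity(probe, enrolled) >= threshold


# Made-up four-number face prints; real systems use far longer vectors.
enrolled = [0.12, 0.80, 0.35, 0.44]
same_person = [0.10, 0.82, 0.33, 0.47]
other_person = [0.90, 0.05, 0.40, 0.10]

print(is_match(same_person, enrolled))
print(is_match(other_person, enrolled))
```

The threshold is the trade-off: set it too loose and a spoof that is merely similar gets through, set it too strict and the real owner is locked out.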

Some banks and financial-services companies use third-party facial-identification services, not Apple’s Face ID system, to sign up customers on their iPhone apps, Mr. Spencer says. This is potentially less accurate. “You end up looking at regular cameras on a mobile phone,” he says. “There’s no infrared capability, no dot projectors.”

Many online-only banks ask users to upload video selfies alongside a photo of their driver’s licenses or passports, and then use a third party’s facial-recognition software to match the video to the ID. The images sometimes go to human reviewers if the system flags something wrong, Mr. Spencer says.

Seeking a solution

Mr. Polyakov regularly tests the security of facial-recognition systems for his clients and says there are two ways to protect such systems from being fooled. One is to update the underlying AI models to beware of novel attacks by redesigning the algorithms that underpin them. The other is to train the models with as many examples as possible of the altered faces that could spoof them, known as adversarial examples.

ID.me says that people also used computer-generated images such as this one to try to fraudulently claim unemployment benefits.

Photo: ID.me

Unfortunately, it can take 10 times the number of images needed to train a facial-recognition model to also protect it from spoofing—a costly and time-consuming process. “For each human person you need to add the person with adversarial glasses, with an adversarial hat, so that this system can know all combinations,” Mr. Polyakov says.
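A small sketch shows why covering "all combinations" inflates a training set so quickly. It assumes a hypothetical list of people and adversarial accessories and simply enumerates every pairing. It does not generate images, and the names are placeholders.

```python
from itertools import combinations


def accessory_combinations(accessories):
    """Return every subset of accessories, including the empty one (a clean face)."""
    combos = []
    for size in range(len(accessories) + 1):
        combos.extend(combinations(accessories, size))
    return combos


def build_training_plan(people, accessories):
    """Pair each person with every accessory combination to be photographed or synthesized."""
    return [
        (person, combo)
        for person in people
        for combo in accessory_combinations(accessories)
    ]


# Hypothetical inputs for illustration only.
people = ["person_a", "person_b"]
accessories = ["adversarial_glasses", "adversarial_hat"]

plan = build_training_plan(people, accessories)
print(len(plan))  # 2 people x 4 combinations each
```

Each added accessory doubles the number of variants per person in this scheme, so even a short list of spoofing props multiplies the images a model has to see.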

Companies such as Google, Facebook and Apple are working on finding ways to prevent presentation attacks, according to Mr. Polyakov’s firm’s analysis of more than 2,000 scientific research papers about AI security. Facebook, for instance, said last month that it is releasing a new tool for detecting deepfakes.

ID.me’s Mr. Hall says that by this past February, his company was able to stop almost all of the fraudulent selfie attempts on the government sites, bringing the number that got through down to single digits from among millions of claims. The company got better at detecting certain masks by labeling images as fraudulent, and by tracking the device, IP addresses and phone numbers of repeat fraudsters across multiple fake accounts, he says. It also now checks how the light of a smartphone reflects and interacts with a person’s skin or another material. The attempts at face-spoofing have also declined. “[Attackers] are typically unwilling to use their real face when committing a crime,” Mr. Hall says.
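Tracking devices, IP addresses and phone numbers across accounts amounts to grouping accounts that share any identifier. The sketch below shows one common way to do that, a union-find structure. It is an assumption about how such linking could work, not a description of ID.me's system, and the account names and identifiers are invented.

```python
from collections import defaultdict


def link_accounts(accounts):
    """Group accounts that share any identifier, directly or through a chain.

    accounts maps an account ID to a set of identifier strings
    (device, IP address, phone number). Returns only groups of two or more.
    """
    parent = {account: account for account in accounts}

    def find(x):
        # Follow parent links to the group's root, shortening the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_seen = {}  # identifier -> first account that used it
    for account, identifiers in accounts.items():
        for identifier in identifiers:
            if identifier in first_seen:
                union(first_seen[identifier], account)
            else:
                first_seen[identifier] = account

    groups = defaultdict(set)
    for account in accounts:
        groups[find(account)].add(account)
    return [group for group in groups.values() if len(group) > 1]


# Invented example: three accounts chained together by a device and a phone number.
accounts = {
    "acct_1": {"device:abc", "ip:203.0.113.5"},
    "acct_2": {"device:abc", "phone:555-0100"},
    "acct_3": {"phone:555-0100"},
    "acct_4": {"device:xyz"},
}

print(link_accounts(accounts))
```

Here acct_1 and acct_3 share nothing directly, but both are tied to acct_2, so all three land in one group while acct_4 stays out.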

—Liza Lin and Qianwei Zhang contributed to this article.

Write to Parmy Olson at parmy.olson@wsj.com

Copyright ©2020 Dow Jones & Company, Inc. All Rights Reserved.